Tech

Researchers question AI’s ‘reasoning’ ability as models stumble on math problems with trivial changes

How do machine learning models do what they do? And are they really “thinking” or “reasoning” the way we understand those things? This is a philosophical question as much as a practical one, but a new paper making the rounds Friday suggests that the answer is, at least for now, a pretty clear “no.”

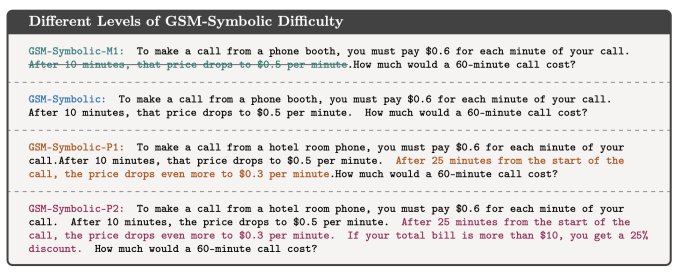

A group of AI research scientists at Apple released their paper, “Understanding the limitations of mathematical reasoning in large language models,” to general commentary Thursday. While the deeper concepts of symbolic learning and pattern reproduction are a bit in the weeds, the basic concept of their research is very easy to grasp.

Let’s say I asked you to solve a simple math problem like this one:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday. How many kiwis does Oliver have?

Obviously, the answer is 44 + 58 + (44 * 2) = 190. Though large language models are actually spotty on arithmetic, they can pretty reliably solve something like this. But what if I threw in a little random extra info, like this:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday, but five of them were a bit smaller than average. How many kiwis does Oliver have?

It’s the same math problem, right? And of course a grade-schooler would know that even a small kiwi is still a kiwi. But as it turns out, this extra data point confuses even state-of-the-art LLMs. Here’s the take from OpenAI’s o1-mini:

… on Sunday, 5 of these kiwis were smaller than average. We need to subtract them from the Sunday total: 88 (Sunday’s kiwis) – 5 (smaller kiwis) = 83 kiwis
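To make the gap concrete, here is a minimal sketch of the two readings of the problem. The variable names are illustrative assumptions, and the 185 figure is simply what the quoted subtraction would produce if carried through to a total; the article itself only quotes the intermediate step of 83.

```python
# Kiwi counts taken from the example problem above.
friday = 44
saturday = 58
sunday = 2 * friday  # "double the number of kiwis he did on Friday"

# Correct reading: size is irrelevant, so every kiwi counts.
correct_total = friday + saturday + sunday

# Flawed reading quoted above: subtract the five smaller kiwis from Sunday.
smaller_kiwis = 5
distracted_sunday = sunday - smaller_kiwis
distracted_total = friday + saturday + distracted_sunday

print(correct_total)      # 190
print(distracted_sunday)  # 83
print(distracted_total)   # 185
```

The only difference between the two totals is the irrelevant clause about kiwi size, which changes nothing about how many kiwis Oliver picked.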

This is just one simple example out of hundreds of questions that the researchers lightly modified, nearly all of which led to enormous drops in success rates for the models attempting them.

Now, why should this be? Why would a model that understands the problem be thrown off so easily by a random, irrelevant detail? The researchers propose that this reliable mode of failure means the models don’t really understand the problem at all. Their training data does allow them to respond with the correct answer in some situations, but as soon as the slightest actual “reasoning” is required, such as whether to count small kiwis, they start producing weird, unintuitive results.

As the researchers put it in their paper:

[W]e investigate the fragility of mathematical reasoning in these models and demonstrate that their performance significantly deteriorates as the number of clauses in a question increases. We hypothesize that this decline is due to the fact that current LLMs are not capable of genuine logical reasoning; instead, they attempt to replicate the reasoning steps observed in their training data.

This observation is consistent with the other qualities often attributed to LLMs due to their facility with language. When, statistically, the phrase “I love you” is followed by “I love you, too,” the LLM can easily repeat that — but it doesn’t mean it loves you. And although it can follow complex chains of reasoning it has been exposed to before, the fact that this chain can be broken by even superficial deviations suggests that it doesn’t actually reason so much as replicate patterns it has observed in its training data.

Mehrdad Farajtabar, one of the co-authors, breaks down the paper very nicely in this thread on X.

An OpenAI researcher, while commending Mirzadeh et al.’s work, objected to their conclusions, saying that correct results could likely be achieved in all these failure cases with a bit of prompt engineering. Farajtabar (responding with the typical yet admirable friendliness researchers tend to employ) noted that while better prompting may work for simple deviations, the model may require exponentially more contextual data in order to counter complex distractions that, again, a child could trivially point out.

Does this mean that LLMs don’t reason? Maybe. That they can’t reason? No one knows. These are not well-defined concepts, and the questions tend to appear at the bleeding edge of AI research, where the state of the art changes on a daily basis. Perhaps LLMs “reason,” but in a way we don’t yet recognize or know how to control.

It makes for a fascinating frontier in research, but it’s also a cautionary tale when it comes to how AI is being sold. Can it really do the things they claim, and if it does, how? As AI becomes an everyday software tool, this kind of question is no longer academic.

Tech

Apple might release a $2,000 Vision headset next year

Apple’s Vision Pro hasn’t exactly reshaped the market, but the company isn’t giving up on headsets that combine the digital and real worlds.

A new report from Bloomberg’s Mark Gurman says that Apple’s next big mixed reality release could come as early as next year, with the launch of a Vision headset costing around $2,000 — not exactly cheap, but more affordable than the $3,500 Vision Pro. To achieve this price, Apple would use cheaper materials and a less powerful processor, and it would not include the EyeSight feature that shows a user’s eyes outside the headset.

Next up would be a second-generation Vision Pro in 2026, and then potentially smart glasses (akin to Meta’s Ray-Bans) and AirPods with cameras in 2027.

The same report offers an update on Apple’s smart home strategy. The company hasn’t had much success here, either, but there are reportedly plans for “an affordable iPad-like screen” that could be placed around the house to watch TV, make FaceTime calls, and use apps. This would be followed by a tabletop device with a robot arm, which could cost around $1,000.

Tech

SpaceX successfully catches returning Starship booster

For the first time, SpaceX not only launched its mammoth Starship, but also returned the booster to the launch site and caught it with a pair of oversized “chopsticks.”

This test flight, the fifth in the Starship development program, took place Sunday morning at the company’s Starbase site in southeast Texas. The nearly 400-foot-tall Starship is the centerpiece of SpaceX’s stated ambition to make life multi-planetary and, more immediately, of NASA’s ambitious Artemis campaign to return humans to the surface of the moon.

SpaceX envisions rapid reuse of the entire Starship vehicle, which includes an upper stage (also called Starship) and a Super Heavy booster — but that means proving out the capability to recover both stages and quickly refurbish them for future flights.

So it makes sense that the primary objectives for this fifth flight test were two-fold: attempting the first-ever “catch” of the Super Heavy booster at the launch site and an on-target Starship reentry and splashdown in the Indian Ocean.

The latter goal had already been achieved: SpaceX nailed a controlled reentry and splashdown of the Starship upper stage during the last test mission in June. But the booster catch, as the company put it in a blog post, would be “singularly novel” in the history of rocketry.

The closest analogue is the now-routine Falcon 9 booster landings on autonomous barges and terrestrial landing zones. In today’s launch, the booster slowed to a hover and gently positioned itself inside the zone of two “chopstick” arms attached to the launch tower. Those arms then closed around the booster and held it up after its engines stopped firing.

You can see the catch at around 40 minutes into SpaceX’s video of the test. Following the booster detachment and catch, Starship continued to ascend into orbit before splashing in the Indian Ocean and exploding (SpaceX had not planned to recover the spacecraft).

SpaceX noted in an update posted on its website that “thousands” of criteria showing healthy systems across the vehicle and pad had to be met for the catch attempt to occur. This test also took place a little sooner than expected: the Federal Aviation Administration had previously said that it did not anticipate issuing a modified launch license for this test before late November.

SpaceX took umbrage at that timeline, repeatedly calling out what it characterized as the regulator’s inefficiency. But the FAA announced on Saturday that it had approved the launch.

“The FAA determined SpaceX met all safety, environmental and other licensing requirements for the suborbital test flight,” the regulator said in a statement. Notably, the authorization also includes approval for the next test flight, given that “the changes requested by SpaceX for Flight 6 are within the scope of what has been previously analyzed,” the FAA said.

While awaiting this launch license, SpaceX engineers have stayed very busy: in recent months, they have conducted numerous tests on the launch tower, replaced the rocket’s entire thermal protection system with newer tiles and a backup ablative layer, and updated the ship’s software for reentry. This week, engineers completed propellant loading tests and testing of the launch pad’s water deluge system, which is meant to protect the pad from the powerful fire of the booster’s 33 Raptor engines.

The company eventually plans on bringing the Starship upper stage back to the landing site too, though we’ll have to wait to see that in future test launches.

“With each flight building on the learnings from the last, testing improvements in hardware and operations across every facet of Starship, we’re on the verge of demonstrating techniques fundamental to Starship’s fully and rapidly reusable design,” the company says. “By continuing to push our hardware in a flight environment, and doing so as safely and frequently as possible, we’ll rapidly bring Starship online and revolutionize humanity’s ability to access space.”

Anthony Ha contributed to this report, which has been updated to reflect the successful test flight.

Tech

Director Morgan Neville is steering clear of generative AI after ‘Roadrunner’ backlash

One of the most attention-grabbing aspects of “Roadrunner,” the Morgan Neville-directed documentary about Anthony Bourdain, was Neville’s use of generative AI to replicate Bourdain’s voice.

Looking back now, Neville told Wired that he saw this as a “fun” way to “keep [Bourdain’s] voice going in the film.” But his approach drew intense criticism — while the synthetic Bourdain only read words that the real Bourdain had actually written, Neville said many viewers assumed, “Oh, they just made [expletive] up.”

“Many people told me that there were other documentary projects that were doing the same thing, that all reacted; they either changed what they were doing or put giant disclaimers over everything,” he said.

Since then, the director has “assiduously avoided” using AI. Even in his new documentary “Piece by Piece,” in which he dramatizes musician Pharrell’s life with Legos (yes, really), Neville was careful to steer clear.

“Carl Sagan in [Piece by Piece] says, ‘Pharrell’ and I was very clear to everybody that we were, with permission of his widow, going to make him say ‘Pharrell’ without using AI,” Neville said. “We actually experimented to construct the word from syllables [he actually said].”